There has been more and more talk about something called SmartNICs. Development originally started at AWS as an internal project for their cloud, aimed at simplifying their network operations and making the physical network devices simpler, dumber, and easier to manage. To me, this evolution is the next step in the networking layer.

The reason why is simple. For the last few years, the industry has been moving away from complicated configurations on individual network devices to simpler configurations in centrally managed controllers. Abstracting away complexity as we scale is key there. By moving the network configuration to the server NICs instead of the top of rack (ToR) switches, the physical network fabric can be a lot simpler and really just act as a transport layer. SmartNICs would be able to connect to the network using regular static routing, dynamic routing with BGP, or MPLS.

VMware recently announced Project Monterey, in which they’re working with NVIDIA, Pensando, and Intel to bring a complete solution to the market. Most of the architecture details and specifications below come from Pensando, but all SmartNICs are similar in design.

SmartNIC Architecture

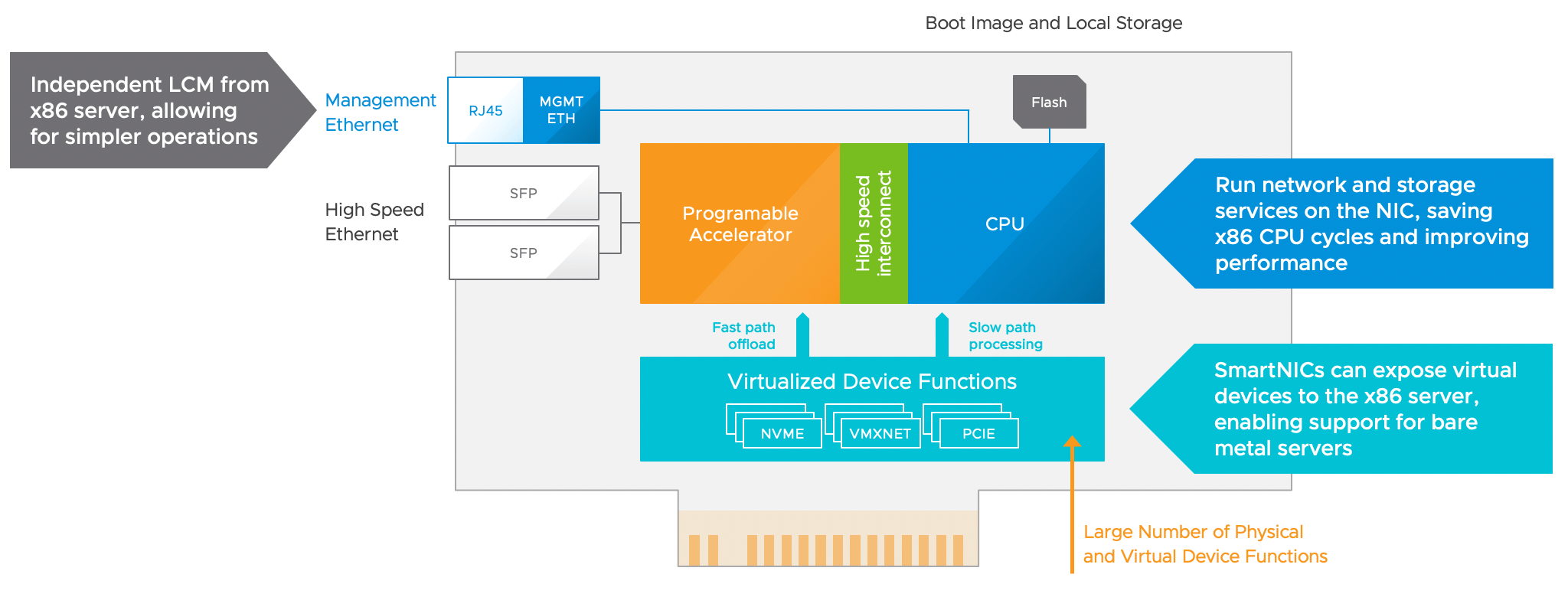

But first, let’s have a look at the architecture that makes up a SmartNIC. Essentially, the NIC has a compute layer on it, making it possible to run custom software on the NIC itself. The compute layer can control the programmable ASIC that serves the network traffic and can perform specific network functions.
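To illustrate that split between the compute layer and the ASIC, here’s a minimal Python sketch. It’s a toy model, not Pensando’s (or anyone’s) actual API: the class and method names are hypothetical, a dictionary stands in for the hardware match-action table, and matching on only the destination IP and port is a simplification.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

# Hypothetical match key: (destination IP, destination port). Real ASIC
# pipelines match on many more header fields.
MatchKey = Tuple[str, int]

@dataclass
class AsicTable:
    """Stand-in for a hardware match-action table (the data plane)."""
    entries: Dict[MatchKey, str] = field(default_factory=dict)

    def lookup(self, dst_ip: str, dst_port: int) -> str:
        # Assumed default action when no entry matches: drop the packet.
        return self.entries.get((dst_ip, dst_port), "drop")

class NicControlPlane:
    """Software on the SmartNIC CPU that programs the ASIC table."""

    def __init__(self, table: AsicTable) -> None:
        self.table = table

    def install_rule(self, dst_ip: str, dst_port: int, action: str) -> None:
        self.table.entries[(dst_ip, dst_port)] = action

table = AsicTable()
control = NicControlPlane(table)
control.install_rule("10.0.0.5", 443, "forward:vf0")
print(table.lookup("10.0.0.5", 443))  # forward:vf0
print(table.lookup("10.0.0.5", 22))   # drop
```

The point is the division of labor: the CPU decides *what* rules exist, while per-packet lookups stay in the (here simulated) ASIC.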

The ASIC also provides an extra security layer between the physical network cable and the regular server OS, because there’s a programmable network device built into the NIC (instead of having that in the ToR switch).

Source: Announcing Project Monterey


To the server OS, the SmartNIC presents virtual interfaces based on the configuration you put in. This reminds me a lot of the Cisco UCS architecture, with its Fabric Interconnects and Virtual Interface Cards, which present either vNICs or vHBAs to the OS. SmartNICs take it a step further by running the Virtualized Device Functions in the NIC directly (and without the need for special uplink devices).

When the NIC can perform the network isolation and functions, all we need from the physical network topology is reachability. The NIC makes sure traffic to and from servers stays separated, using either traditional VLANs or an overlay like VXLAN or Geneve.
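To make the overlay part concrete, here’s a minimal Python sketch of the 8-byte VXLAN header defined in RFC 7348, which a NIC would prepend to a tenant’s Ethernet frame. Each segment gets its own 24-bit VNI, which is what keeps traffic separated even when the physical fabric only provides IP reachability. The function names are hypothetical; a real SmartNIC does this in the ASIC pipeline and also adds the outer Ethernet/IP/UDP headers, which this sketch leaves out.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned VXLAN UDP destination port
VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid

def vxlan_encap(vni: int, inner_frame: bytes) -> bytes:
    """Prepend a VXLAN header to an inner Ethernet frame.

    Header layout (RFC 7348): flags (8 bits), reserved (24 bits),
    VNI (24 bits), reserved (8 bits). Outer headers are omitted here.
    """
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # "!B3xI": 1 flag byte, 3 zero bytes, then a 32-bit word holding the
    # VNI in its top 24 bits and a zero reserved byte at the bottom.
    header = struct.pack("!B3xI", VXLAN_FLAG_VNI_VALID, vni << 8)
    return header + inner_frame

def vxlan_vni(packet: bytes) -> int:
    """Read the VNI back out of a VXLAN-encapsulated payload."""
    flags, word = struct.unpack("!B3xI", packet[:8])
    if not flags & VXLAN_FLAG_VNI_VALID:
        raise ValueError("I flag not set")
    return word >> 8

pkt = vxlan_encap(5001, b"inner-frame")
print(len(pkt) - len(b"inner-frame"))  # 8
print(vxlan_vni(pkt))                  # 5001
```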

Virtualized Device Functions

In the architecture, there is a parallel layer that runs Virtualized Device Functions. If the programmable ASIC does not support a specific network function, this layer can extend it: the ASIC punts the traffic to the SmartNIC CPU, which hands it to a service running on the NIC. Software vendors will be able to run their own services on that CPU, making it possible to run any and all kinds of network functions.
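The dispatch decision can be sketched in a few lines of Python. This is an illustration under assumptions, not how any vendor implements it: the function tables, the service names, and the final fallback of handing unknown traffic to the server OS are all hypothetical.

```python
from typing import Callable, Dict

Packet = Dict[str, str]
Handler = Callable[[Packet], str]

# Functions the ASIC implements natively (hypothetical subset).
asic_functions: Dict[str, Handler] = {
    "route": lambda pkt: f"routed to {pkt['dst']}",
    "nat": lambda pkt: f"translated {pkt['src']}",
}

# Services that software vendors have loaded onto the SmartNIC CPU.
cpu_services: Dict[str, Handler] = {}

def register_cpu_service(name: str, handler: Handler) -> None:
    cpu_services[name] = handler

def process(pkt: Packet) -> str:
    fn = pkt["function"]
    if fn in asic_functions:   # fast path: handled in hardware
        return "asic: " + asic_functions[fn](pkt)
    if fn in cpu_services:     # punted to a service on the SmartNIC CPU
        return "cpu: " + cpu_services[fn](pkt)
    # Assumed fallback: nothing on the NIC handles it, so the host does.
    return "host: passed to server OS"

register_cpu_service("dpi", lambda pkt: "inspected payload")
print(process({"function": "route", "src": "10.0.0.1", "dst": "10.0.1.1"}))
print(process({"function": "dpi"}))
print(process({"function": "ids"}))
```

The three calls exercise the three tiers: the ASIC fast path, the punt to the NIC CPU, and the host fallback.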

Of course, running those services in the ASIC is a bit quicker, which is why the basic networking functions like routing, firewalling, telemetry, load balancing, NAT, and overlay networks are built into it. Still, running a service on the SmartNIC CPU, instead of forwarding the traffic to the server OS, should be quicker (time will tell).

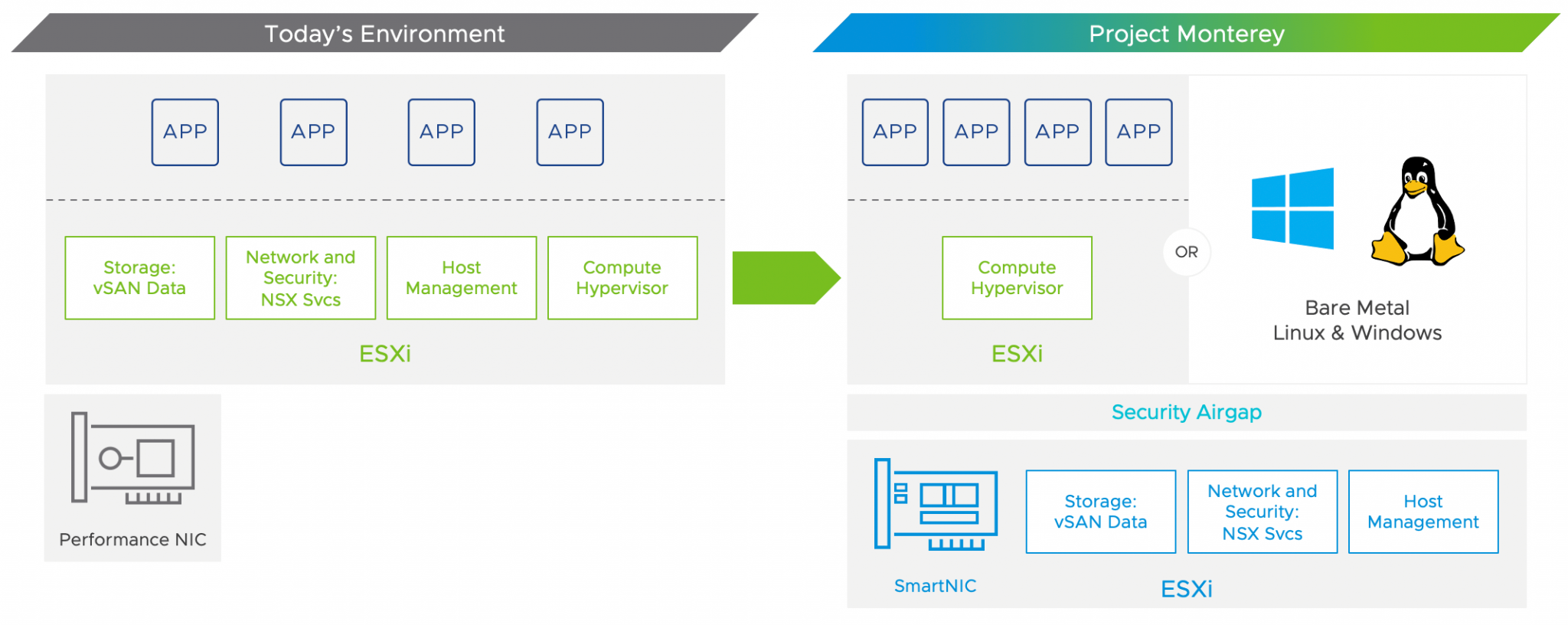

Imagine VMware NSX Data Center running on these SmartNICs. Not taking cycles from the main CPU, making use of the security boundary between the SmartNIC and the OS, and being able to inject network services into the NIC directly would open up a lot of use cases.

Source: Announcing Project Monterey


NSX would run on the NIC and no longer in the server OS: not in the ESXi kernel anymore, and not through the bare metal agent. The only compatibility requirement would be with the SmartNIC, meaning that the OS compatibility matrix should become practically limitless.

Storage

As the NIC typically sits on the same PCIe bus as the storage inside the server, SmartNICs can also talk directly to that storage. So not only would VMware NSX be able to run on the SmartNIC, but also VMware vSAN. The network traffic vSAN generates can vary wildly from environment to environment, but it can definitely be chatty.

There are two reasons for that: 1) it’s a distributed storage system, so everything gets replicated at least once to another server, and 2) with HCI Mesh, remote clusters can use each other’s storage.
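A back-of-the-envelope sketch of why replication alone makes vSAN chatty. It assumes RAID-1 mirroring, where tolerating `ftt` failures means keeping `ftt + 1` copies of every write, and it ignores witness components, metadata, and resync traffic. The function name and the 2 Gb/s write rate are illustrative, not measured values.

```python
def replication_traffic_gbps(write_gbps: float, ftt: int, local_copy: bool) -> float:
    """Estimate network traffic generated by mirrored writes.

    Assumption: RAID-1 mirroring stores ftt + 1 copies. If one copy lands
    on the host issuing the write, only ftt copies cross the network;
    otherwise all ftt + 1 do.
    """
    copies = ftt + 1
    remote_copies = copies - 1 if local_copy else copies
    return write_gbps * remote_copies

print(replication_traffic_gbps(2.0, ftt=1, local_copy=True))   # 2.0
print(replication_traffic_gbps(2.0, ftt=1, local_copy=False))  # 4.0
print(replication_traffic_gbps(2.0, ftt=2, local_copy=False))  # 6.0
```

Every one of those remote copies currently passes through the host OS’s network stack, which is the path a SmartNIC could take over.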

There should be no doubt that skipping the OS for these remote storage operations will improve performance.

Built-in features around remote storage (like NVMe over TCP) also allow you to present a vHBA to the server OS, just like Cisco UCS.

Performance

We’re going fast everywhere these days, and SmartNICs are no exception. For example, Pensando has two cards: the DSC-25 and DSC-100. Here’s a quick glance at what they can do:

- DSC-25 has 2x 10 or 25Gbit interfaces, can forward 50Gb/s of L4 stateful traffic, do 24M PPS unidirectional, and hold 2M routes, all while drawing only 20W of power.

- DSC-100 has 2x 100Gbit interfaces, can forward 100Gb/s of L4 stateful traffic, do 40M PPS unidirectional, and hold 2M routes, while drawing a maximum of 36W of power.
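A quick way to put those numbers side by side is to ask at what average packet size the packet-rate limit and the throughput limit are reached at the same time; with smaller packets, the PPS figure becomes the bottleneck. This Python sketch only does arithmetic on the figures quoted above, assumes they were measured under comparable conditions (the datasheets may not guarantee that), and ignores Ethernet preamble and inter-frame gap overhead.

```python
# Figures as quoted above: stateful L4 throughput (Gb/s),
# unidirectional packet rate (Mpps), and power draw (W).
CARDS = {
    "DSC-25": (50, 24, 20),
    "DSC-100": (100, 40, 36),
}

def breakeven_packet_bytes(gbps: float, mpps: float) -> float:
    """Average packet size at which the PPS and bandwidth limits coincide.

    Below this size the packet-rate limit is hit before the throughput
    limit. Ignores Ethernet preamble and inter-frame gap overhead.
    """
    return (gbps * 1e9) / (mpps * 1e6) / 8

for name, (gbps, mpps, watts) in CARDS.items():
    size = breakeven_packet_bytes(gbps, mpps)
    print(f"{name}: ~{size:.1f} B break-even, {gbps / watts:.2f} Gb/s per W")
# DSC-25: ~260.4 B break-even, 2.50 Gb/s per W
# DSC-100: ~312.5 B break-even, 2.78 Gb/s per W
```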

These numbers are ridiculous, and I can’t wait to get my hands on some of these to test these theories. 😉

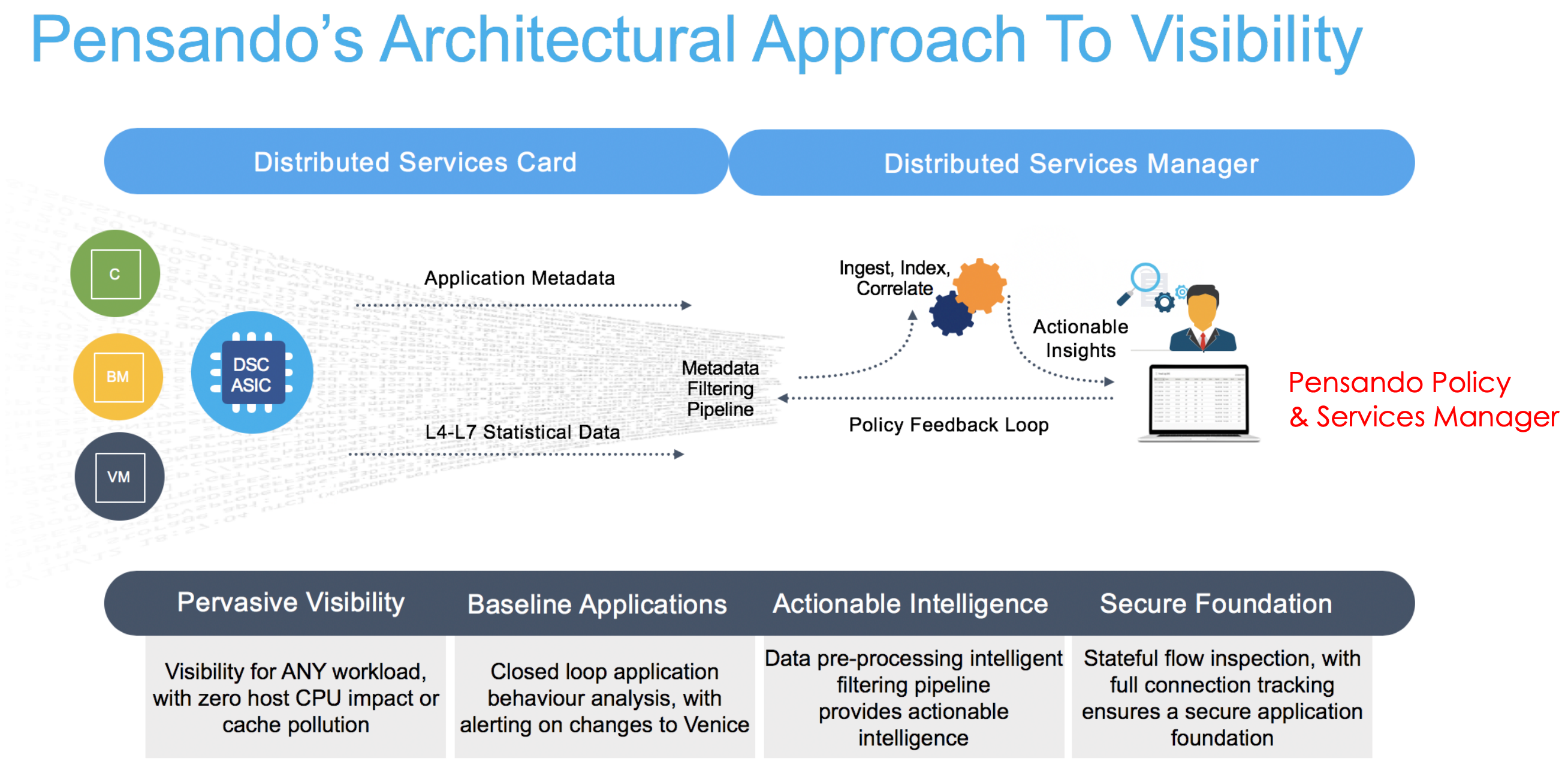

Observability

Good visibility and monitoring are key for a decentralized network: it’s harder to know exactly what’s going on when you can’t just log into a single device and run a packet capture. Luckily, all these SmartNICs have built-in observability with pretty impressive functionality. NetFlow (with added fields for segment sizes, application info, and firewall rule actions), packet inspection to get L4 to L7 information, flow logging, and round-trip-time latency tracking are all in there. Most of this data is also available via the SmartNIC APIs, so other products (like vRealize Network Insight) could consume it.
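At its core, NetFlow-style flow logging folds individual packets into per-flow records keyed on the 5-tuple. The Python sketch below shows that idea with a firewall-action field like the ones mentioned above. The record layout and field names are hypothetical and don’t reflect Pensando’s actual export format.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple

# 5-tuple: (src IP, dst IP, src port, dst port, protocol)
FiveTuple = Tuple[str, str, int, int, str]

@dataclass
class FlowRecord:
    packet_count: int = 0
    byte_count: int = 0
    action: str = ""  # last firewall rule action seen for this flow

def aggregate(
    observations: Iterable[Tuple[FiveTuple, int, str]],
) -> Dict[FiveTuple, FlowRecord]:
    """Fold per-packet observations (5-tuple, size, firewall action) into flows."""
    flows: Dict[FiveTuple, FlowRecord] = defaultdict(FlowRecord)
    for key, size, action in observations:
        rec = flows[key]
        rec.packet_count += 1
        rec.byte_count += size
        rec.action = action
    return dict(flows)

web = ("10.0.0.5", "10.0.1.9", 51514, 443, "tcp")
ssh = ("10.0.0.5", "10.0.1.9", 51515, 22, "tcp")
flows = aggregate([(web, 1500, "allow"), (web, 900, "allow"), (ssh, 60, "deny")])
for key, rec in flows.items():
    print(key[3], rec.packet_count, rec.byte_count, rec.action)
# 443 2 2400 allow
# 22 1 60 deny
```

Doing this aggregation on the NIC means only compact flow records, not raw packets, need to leave the server for a collector.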

Source: Deep Observability with the Pensando Distributed Services Platform


Conclusion

The performance, security, and extensibility of these SmartNICs will make them the next step in networking evolution. They move network functions out of central network devices and decentralize them, directly onto the servers. Offloading network and storage functions from the main CPU and the OS to the NIC hardware should make for interesting performance gains while reducing the load on the main CPU. And intrinsic observability makes it easier to monitor and troubleshoot the network. All of these reasons are why I can’t wait for SmartNICs to become the default.

Virtual Cloud Network

Project Monterey is the start of SmartNIC software development for VMware. While it’s still a tech preview, VMware is definitely going full steam ahead with incorporating its network software into these NICs and making them a foundational part of the Virtual Cloud Network by integrating NSX.

Learn more about the Virtual Cloud Network vision from Pat Gelsinger, Rajiv Ramaswami, and Tom Gillis here: https://www.vmware.com/modern-network-events.html

Learn More

- https://blogs.vmware.com/vsphere/2020/09/announcing-project-monterey-redefining-hybrid-cloud-architecture.html

- https://pensando.io/documents/

- https://nvidianews.nvidia.com/news/nvidia-announces-industrys-first-secure-smartnic-optimized-for-25g

- https://www.nextplatform.com/2019/10/31/hypercalers-lead-the-way-to-the-future-with-smartnics/

- Project Monterey Tech Field Day: https://www.youtube.com/watch?v=9v9Z0lF5bfg
