Secure Logging from vRealize Network Insight

Date

Tue Feb 11

Author

Martijn

By default, the syslog capability in vRealize Network Insight only supports UDP on port 514, sending the messages in cleartext. It’s important to have Network Insight send its logs somewhere, though, as they can be useful when troubleshooting Network Insight itself.
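To make the "cleartext" point concrete, here is a minimal Python sketch that sends a simplified BSD-style syslog message over UDP to a listener on the loopback interface and reads it back. It is not how Network Insight builds its messages: the function names, the `vrni-demo` tag, and the message text are made up for illustration, and an ephemeral local port is used instead of 514 so it runs without root privileges.

```python
import socket


def format_syslog(message, facility=1, severity=6, tag="vrni-demo"):
    """Build a simplified syslog payload: <PRI>TAG: MESSAGE.

    PRI is facility * 8 + severity; the tag is a hypothetical name.
    """
    pri = facility * 8 + severity
    return f"<{pri}>{tag}: {message}".encode("utf-8")


def demo_cleartext_udp(message="collector cannot reach data source"):
    """Send one message over UDP on loopback and return the bytes received."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver:
        receiver.bind(("127.0.0.1", 0))  # ephemeral port instead of 514
        receiver.settimeout(2)
        port = receiver.getsockname()[1]
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
            sender.sendto(format_syslog(message), ("127.0.0.1", port))
        data, _ = receiver.recvfrom(4096)
    return data


if __name__ == "__main__":
    # The payload arrives exactly as it was sent: readable by anyone on the path.
    print(demo_cleartext_udp())
```

Whatever is received is byte-for-byte what was sent, which is why anything capturing traffic between the appliance and the syslog server can read the logs.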

To be clear, these logs contain information about the Network Insight platform and collector appliances themselves: for example, logs on the processing of incoming data, or errors when the collector is unable to connect to a data source (vCenter, switch, NSX, router, etc.). If you’re looking for logs on changes in the network that Network Insight monitors, look at the System and User-Defined Events instead.

vRealize Log Insight Agent

It’s a well-kept secret that both the platform and collector appliances have a vRealize Log Insight (vRLI) agent installed on them. This agent can be configured via the CLI on the appliances. Once configured, the agent sends the same logs as the syslog configuration does, but over the vRLI API using HTTPS, so they are encrypted in transit.

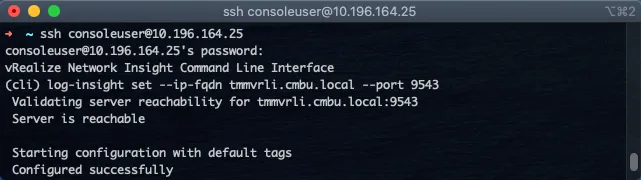

Here’s an example of how to configure it:

In the above example, I used tmmvrli.cmbu.local as the vRLI server (an IP address works as well as a hostname) and port 9543, which is the HTTPS port for the vRLI API.
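To check from another machine that the vRLI server accepts events over HTTPS on that port, a small Python sketch like the one below can help. This is separate from the agent configuration on the appliances. It assumes the vRLI ingestion REST endpoint is `/api/v1/events/ingest/{agentId}` and that any UUID is accepted as the agent ID, so verify both against the vRLI API documentation for your version. The function names and the test event text are hypothetical.

```python
import json
import ssl
import urllib.request
import uuid


def build_ingest_request(host, port=9543, agent_id=None, text="vRNI test event"):
    """Build (but do not send) an HTTPS POST for the vRLI ingestion API.

    The endpoint path is an assumption; check it against your vRLI version.
    """
    agent_id = agent_id or str(uuid.uuid4())
    url = f"https://{host}:{port}/api/v1/events/ingest/{agent_id}"
    body = json.dumps({"events": [{"text": text}]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_test_event(host, port=9543, cafile=None):
    """Send one test event and return the HTTP status code.

    cafile is an optional CA bundle for a vRLI certificate that is not
    trusted by the system store; certificate checks stay enabled.
    """
    context = ssl.create_default_context(cafile=cafile)
    request = build_ingest_request(host, port)
    with urllib.request.urlopen(request, context=context, timeout=10) as response:
        return response.status


if __name__ == "__main__":
    # Hostname taken from the example in this post.
    print(send_test_event("tmmvrli.cmbu.local"))
```

Certificate verification is left on deliberately: if the point is an encrypted chain, a test that skips validation would not prove much. If the vRLI certificate is self-signed, pass its CA file through `cafile`.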

Obviously, this requires a vRealize Log Insight instance. If the desired target is another log repository (e.g., Splunk), vRLI can be set up to forward the logs that come from Network Insight. That forwarder can then use secure syslog, which keeps the whole chain encrypted.