VMworld Europe took place in Barcelona last week and my experience was mostly centered around NSX. A few technical sessions and a lot of meetings later, I have been given an insight into the future of virtual networking and it’s awesome! In a mini series of posts (otherwise it’d be much too long and windy), I am going to discuss my interpretation of how this will look.

Disclaimer: this post is based on public roadmap information divulged by VMware (mostly the great mind Bruce Davie) and can be subject to change. Also, some of this is my interpretation of the features.

VMware NSX is currently a full-grown networking product. The foundation of logically separate networks, distributed routing/firewalling and edge services is production ready and provides the basis for the next generation of networking, which VMware and its partners are working on. This part will focus mostly on integration between the virtual and physical network.

Hardware Integration & Management from the Virtual Layer

Top-of-Rack (ToR) switches have been adopting VXLAN for a while now, working towards a fully integrated stack in which the virtual and physical layers are managed as one. Vendors like Arista, Brocade, Cumulus, HP and Juniper have been integrating their hardware switches with an open VXLAN network, allowing them to serve as VXLAN Tunnel End Points (VTEP) inside the NSX transport network. In a standard NSX deployment, only your ESXi hosts participate in the VXLAN network, so VXLAN traffic only traverses between ESXi hosts. When you integrate ToR switches into this transport network, the network hardware becomes aware of the existence of the virtual networks and you open up your network to endless possibilities to integrate the virtual and physical layer. I will be elaborating on a few of those below.
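To make the VTEP idea a bit more concrete: what a VTEP (ESXi host or hardware switch) fundamentally does is wrap the original Ethernet frame in a VXLAN header that carries the 24-bit network identifier (VNI) of the logical network. Below is a minimal Python sketch of just that 8-byte header, following the layout in RFC 7348. The function names and the VNI value 5000 are my own picks for illustration; the outer UDP/IP headers and the inner frame are left out.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag from RFC 7348: the VNI field is valid


def build_vxlan_header(vni: int) -> bytes:
    """Return the 8-byte VXLAN header for a 24-bit VXLAN Network Identifier."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) followed by 24 reserved bits.
    # Word 2: VNI (24 bits) followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from the first 8 bytes of a VXLAN header."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag is not set")
    return vni_word >> 8


if __name__ == "__main__":
    header = build_vxlan_header(5000)  # 5000 is an example segment ID
    print(header.hex(), parse_vni(header))
```

Every VTEP in the transport network has to agree on which VNI belongs to which logical network, which is exactly the information the hardware switches need to learn from NSX.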

Bridging Virtual and Physical Networks

In some cases, it is useful to have virtual machines and physical servers in the same layer-2 broadcast domain. For example, certain back-up solutions still require it, or you may have an application that needs to be on physical hardware and uses broadcast to communicate with its workers (the virtual machines). Very ugly, but they are out there.

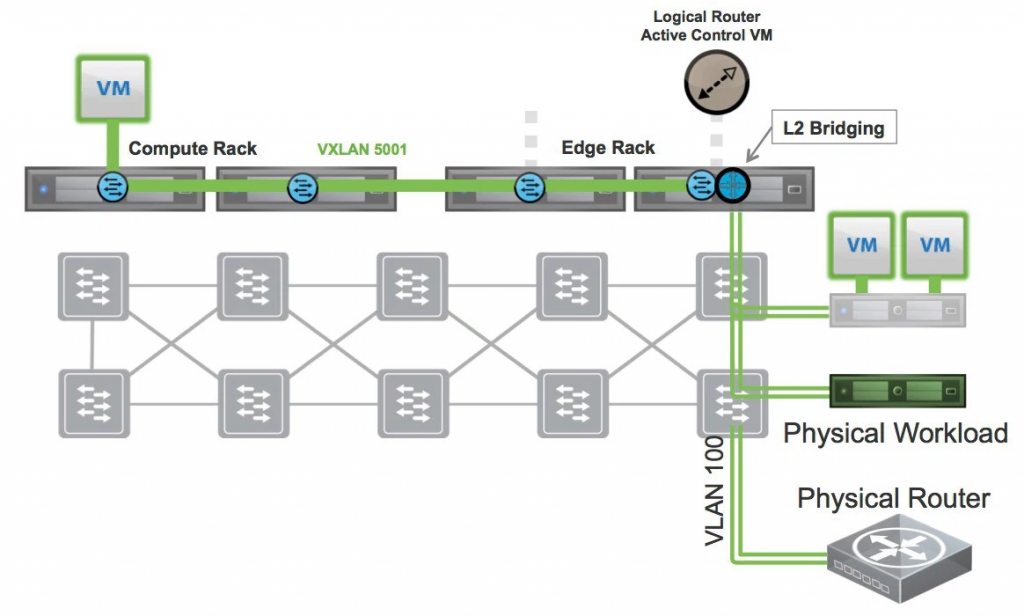

Before NSX 6.2, the only way to bridge between virtual and physical (VXLAN to VLAN, respectively) was to create an NSX Distributed Logical Router and define an L2 Bridge on it. This effectively creates a VXLAN to VLAN bridge on the ESXi host where the active Control VM resides, which means all the bridged traffic flows over a single ESXi host. It works perfectly, but it’s not the best solution in terms of scalability.

In NSX 6.2, VMware added support for hardware VTEPs inside the VXLAN network of your ESXi hosts. This is the first step in integrating the physical and virtual worlds and basically solves the scalability constraints of doing VXLAN to VLAN bridging with the Logical Router.

Currently, only multicast transport zones and configuration transfers via OVSDB are supported, so there are two ways to have capable hardware switches interact with NSX. First, if you configure the hardware switches in the same multicast group as the ESXi hosts (Segment ID pool and Multicast addresses), the switches will learn the virtual network information from the ESXi hosts themselves.

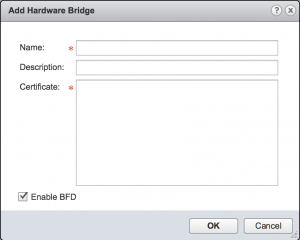

Secondly, you can have the NSX Controllers talk to the hardware switches selectively with OVSDB. By selectively, I mean you have to add the switches (or the controller(s) of those switches) to NSX manually, using an SSL certificate that the hardware switch provides as security. You do this under “Service Definitions”, on the “Hardware Devices” page.

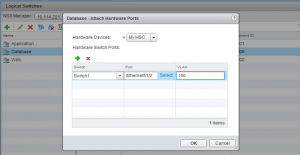

Once you’ve added the connection, the NSX Controllers will discover the hardware switch(es) and you can use them to configure physical ports to be inside the virtual networks (Logical Switches). Simply select a logical switch, select the action “Attach Hardware Ports”, select the hardware switch you previously added and add the physical ports you want to connect to the Logical Switch.

You might wonder about the VLAN ID mentioned in the screenshot below. This awesome little nugget is a way to create a trunked interface, trunking the logical switch network as VLAN ID X to the physical port. If you leave this as VLAN ID 0, the physical port will be an access port.
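The VLAN ID behaviour is easy to capture in a few lines. This is only a small Python model of the rule described above (VLAN ID 0 means access port, anything else means the logical switch is trunked as that VLAN), not the NSX API. The class name, field names and the switch, port and logical switch names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HardwarePortBinding:
    """Hypothetical model of one physical port attached to a Logical Switch."""

    switch: str          # hardware switch as registered in NSX (made-up name)
    port: str            # physical port on that switch (made-up name)
    logical_switch: str  # Logical Switch the port is attached to
    vlan_id: int = 0     # 0 = access port, 1-4094 = trunked as that VLAN ID

    def __post_init__(self):
        if not 0 <= self.vlan_id <= 4094:
            raise ValueError("VLAN ID must be between 0 and 4094")

    @property
    def mode(self) -> str:
        # VLAN ID 0 leaves the port untagged; any other ID tags the
        # logical switch traffic with that VLAN on the physical port.
        return "access" if self.vlan_id == 0 else "trunk"


if __name__ == "__main__":
    untagged = HardwarePortBinding("tor-1", "xe-0/0/1", "web-tier")
    tagged = HardwarePortBinding("tor-1", "xe-0/0/2", "web-tier", vlan_id=100)
    print(untagged.mode, tagged.mode)
```

The nice part of the trunk option is that the same physical port can carry several logical switches, each on its own VLAN ID, towards a single physical server.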

Juniper has a great video on this, displaying how easy it is to connect to their QFX platform and connect a physical port to a logical switch, all from NSX.

Distributed Routing in the Physical Network

Extending the logical switches to the VXLAN network and allowing physical ports to be connected to the Logical Switches was the first step. The second step is to pull the distributed services currently provided by NSX into the physical layer. This is future stuff, not currently available.

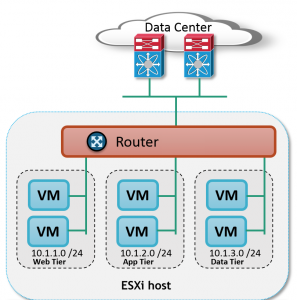

The current NSX Distributed Logical Router lives in the kernel of ESXi hosts, allowing very efficient and quick packet forwarding between different subnets. It allows you to connect up to 1,000 Logical Switches and pushes routing information to the ESXi host kernel, so that it can forward packets through the shortest path available (VM->VM or VM->Other ESXi host->VM).

When this is integrated with the physical network hardware, NSX will provide the routing information to the hardware switches through the OVSDB protocol, and the switches basically create local switch virtual interfaces (SVIs) throughout the physical network, which makes it possible to route between different logical networks. Distributed is the key differentiator here.

In this example, there are two ESXi hosts and two hardware VTEP-capable switches. Both the ESXi hosts and the hardware switches are meshed in the same VXLAN transport network and the hardware switches are registered with NSX as devices capable of distributed functions. When you create a Logical Switch, it is pushed to all ESXi hosts and hardware switches so you can easily bridge virtual and physical ports. When you create a Logical Router, it would also be pushed to both the ESXi hosts (through the NSX native protocol) and the hardware switches (through OVSDB), creating a completely distributed routing layer. Packets would be routed through the most efficient paths.
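Here is how I picture that example in code. It is a toy Python model and purely my interpretation: one Logical Router definition is copied to every VTEP, and each VTEP (ESXi host or hardware switch) then makes its own routing decision locally with a longest-prefix match. The class names, VTEP names and subnets are hypothetical, and the real push mechanisms (NSX native protocol and OVSDB) are reduced to a simple method call.

```python
import ipaddress


class LogicalRouter:
    """Central definition: which subnet lives on which Logical Switch."""

    def __init__(self):
        self.interfaces = {}  # ip_network -> logical switch name

    def add_interface(self, cidr, logical_switch):
        self.interfaces[ipaddress.ip_network(cidr)] = logical_switch


class Vtep:
    """An ESXi host or hardware switch holding a local copy of the routes."""

    def __init__(self, name, kind):
        self.name = name
        self.kind = kind  # "esxi" or "hardware"
        self.routes = {}

    def receive(self, router):
        # Stand-in for the push over the NSX native protocol or OVSDB.
        self.routes = dict(router.interfaces)

    def route(self, dst_ip):
        """Return the Logical Switch for dst_ip, decided locally."""
        addr = ipaddress.ip_address(dst_ip)
        matches = [net for net in self.routes if addr in net]
        if not matches:
            return None
        best = max(matches, key=lambda net: net.prefixlen)
        return self.routes[best]


if __name__ == "__main__":
    router = LogicalRouter()
    router.add_interface("10.0.1.0/24", "web-tier")
    router.add_interface("10.0.2.0/24", "app-tier")

    vteps = [Vtep("esxi-1", "esxi"), Vtep("esxi-2", "esxi"),
             Vtep("tor-1", "hardware"), Vtep("tor-2", "hardware")]
    for vtep in vteps:
        vtep.receive(router)
        print(vtep.name, vtep.route("10.0.2.15"))
```

Because every VTEP holds the same table, a packet never has to hairpin through a central router; the first device that sees it can already forward it towards the right logical network.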

This also creates a cool new way to optimise routing inside the physical network, without creating large configurations on each switch and adding a lot of complexity to the network. If implemented in a largely physical workload network, this will optimise routing paths immensely.

Distributed Firewall in the Physical Network

One of the key features of VMware NSX is the Distributed Firewall. Making sure that every service can only send and receive the traffic it is explicitly allowed to is the future of security. Being able to define a small set of security policies (web can talk to app over 443, app can talk to db over 3306, etc) and have that applied to your entire virtual infrastructure is revolutionary. But what happens if you extend the physical network into the virtual network?

The quick answer is nothing; the Distributed Firewall on your ESXi hosts will continue to work as configured. However, the physical workloads would not have the Distributed Firewall module in front of them, because they are connected to a physical switch. That reverts the traffic to the traditional behaviour: everything in the same logical network can talk to everything else.

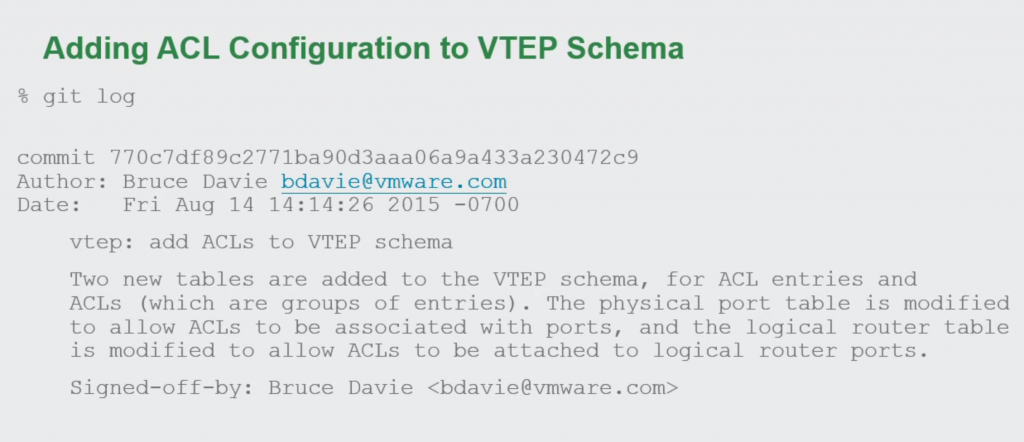

This is why VMware has already extended the OVSDB protocol to support access-list (ACL) configuration being sent to the hardware switches with the VTEP Schema. This is necessary to remotely push ACLs to physical hardware. Vendors are already implementing this schema change and I suspect this feature will be available pretty quickly in switches from Arista, Juniper and Cumulus.

By being able to remotely configure ACLs on the physical switches, NSX would be able to take the defined security policies, translate them to the proper format and push them via OVSDB to the physical network, providing the same Distributed Firewalling capabilities in the physical network and securing it.

Considering this would be pushed through OVSDB, the hardware switches (or controller(s)) would have to be registered within NSX for this to work. I’ve also heard the statement that configuration of the Distributed Firewall policies would remain exactly the same (web can talk to app over 443, app can talk to db over 3306, etc) over the virtual and physical network, making it stupid simple to implement a security policy across all layers at the same time.
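To illustrate what such a translation could look like, here is a rough Python sketch. It is speculation on my part, not how NSX does it: it takes the two example policies, expands the groups into their member addresses and renders one permit line per source/destination pair, followed by a default deny. The function and class names, the IP addresses and the generic ACL syntax are all made up for the example; a real implementation would emit whatever the VTEP schema and the switch vendor expect.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One policy line: source group may reach destination group on a TCP port."""

    source: str
    destination: str
    port: int


def to_acl_entries(rules, members):
    """Expand group-based rules into per-host ACL lines (generic, made-up syntax)."""
    entries = []
    for rule in rules:
        for src in sorted(members.get(rule.source, [])):
            for dst in sorted(members.get(rule.destination, [])):
                entries.append(
                    f"permit tcp host {src} host {dst} eq {rule.port}"
                )
    # Anything not explicitly allowed is dropped.
    entries.append("deny ip any any")
    return entries


if __name__ == "__main__":
    rules = [Rule("web", "app", 443), Rule("app", "db", 3306)]
    members = {  # hypothetical group membership, virtual or physical
        "web": ["10.0.1.10", "10.0.1.11"],
        "app": ["10.0.2.10"],
        "db": ["10.0.3.10"],
    }
    for line in to_acl_entries(rules, members):
        print(line)
```

The point is that the policy itself stays the same two lines; only the rendering differs per enforcement point, whether that is the ESXi kernel or a hardware switch.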

I assume they would also be working on an implementation similar to the Service Composer for the physical network, so that you can insert third party services (IPS/Anti Malware/etc) inside the physical network, just as you currently can do within the virtual network. But that’s speculation. 😉

That’s part 1 of my mini series on the future of virtual networking. I hope you enjoyed this part and will join me for part 2 of this exciting journey after this commercial break!