Network admins hate stretching VLANs across data centers; we absolutely hate it. It can cause instability at an inter-data-center scope and destroys our isolated fault domains: if something happens with VLAN X in site A, it can also take down site B (unless you take special precautions). Last week and the week before, I spent a few hours helping out customers who had that exact issue, which triggered this post.

The entire idea of stretching VLANs between data centers is about virtual machine mobility. You can fail over between sites without having to make adjustments to your applications (IP address changes and IP references). Most of the time, VLAN stretching comes from the business RTO requirement and the fact that most (traditional) applications can’t fail over at the application layer without changing their make-up.

With Network Virtualization (or network overlays in general), we can finally put an end to this. I’m a practical person, so in the rest of this article I’m going to lay out an example using VMware NSX and a stretched vSphere cluster (as that introduces some challenges). Of course you can use plain VXLAN and solve it with manual configuration, but why take the hard way when you can take the NSX way? 😉

Stretching the Application Networks

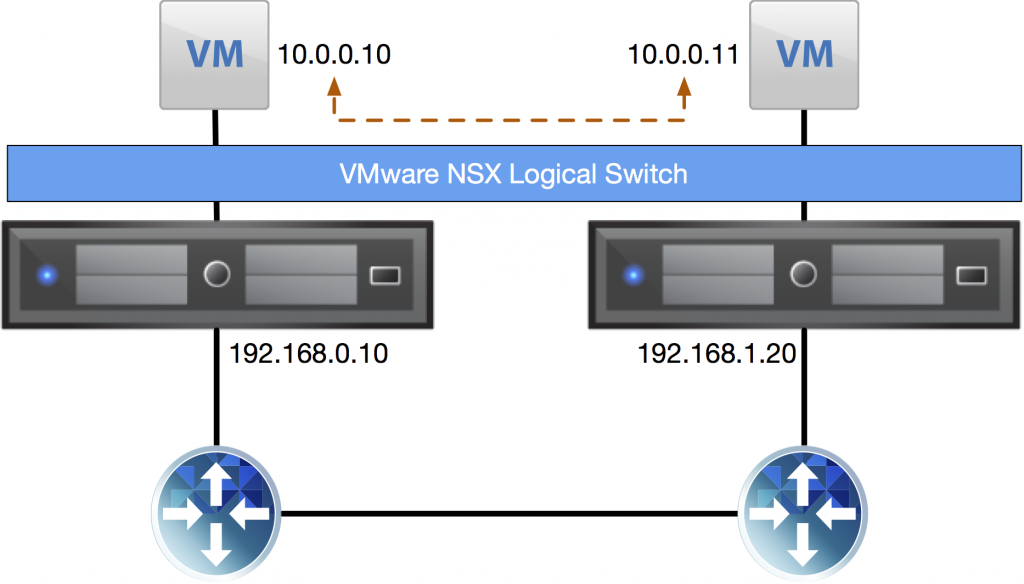

Using VXLAN, you create a virtual (a.k.a. software) network overlay on top of your physical network. The best part of VXLAN is that it can build layer-2 networks on top of a layer-3 network. The applications think they are on a layer-2 network, but the actual traffic is encapsulated and sent between ESXi hosts over layer 3.
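To make the "layer 2 on top of layer 3" idea a bit more concrete: per RFC 7348, VXLAN prefixes each Ethernet frame with an 8-byte header carrying a 24-bit VXLAN Network Identifier (VNI), and the result travels as the payload of an ordinary UDP/IP packet between the hosts' tunnel endpoints. The minimal sketch below only builds and parses that header; the function names and the VNI value 5001 are illustrative assumptions, and this is not how NSX itself is implemented.

```python
import struct

# "I" flag from RFC 7348: signals that the VNI field is valid.
VXLAN_FLAG_I = 0x08


def build_vxlan_header(vni: int) -> bytes:
    """Return the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: 8 flag bits + 24 reserved bits. Word 2: 24-bit VNI + 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend the VXLAN header; the result becomes the UDP payload sent between hosts."""
    return build_vxlan_header(vni) + inner_frame


def parse_vni(vxlan_payload: bytes) -> int:
    """Read the VNI back out of a received VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", vxlan_payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("I flag not set, VNI is not valid")
    return vni_word >> 8


print(build_vxlan_header(5001).hex())  # 0800000000138900
```

The VNI is what keeps the different application networks apart on the shared transport network, so the physical network only ever sees host-to-host IP traffic.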

Transport Network

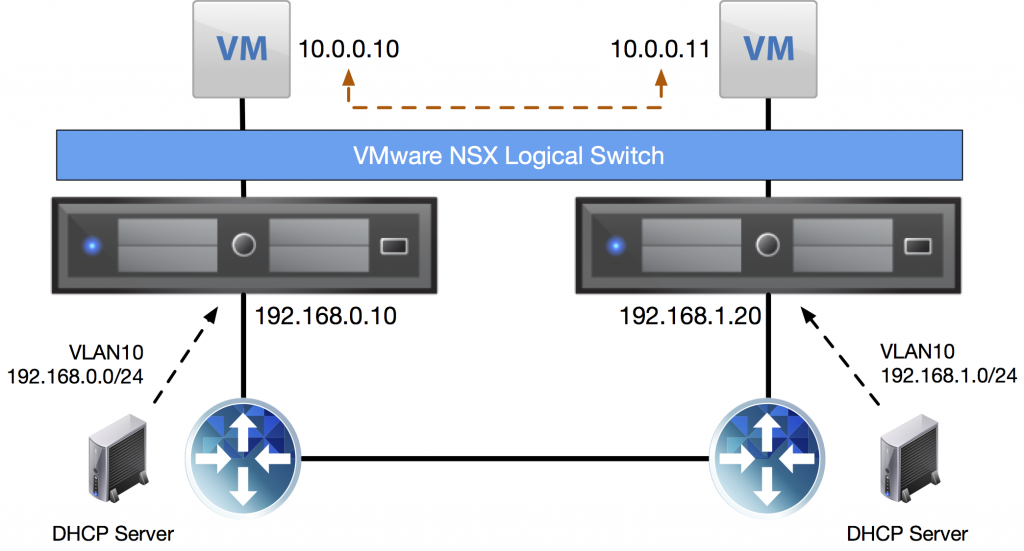

NSX needs a VXLAN transport network to function. This network makes sure the application networks can reach each other. In the picture above, the IP addresses 192.168.0.10 and 192.168.1.20 are on the VXLAN transport network. The two hosts are on different subnets, so there’s a router involved to create connectivity. Here’s where you currently need a trick to get this configuration in a stretched cluster with NSX.
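A quick way to see why a router is involved: assuming the transport subnets in the picture are /24s (the prefix length isn't shown, so that part is an assumption), the two VTEP addresses land in different networks. This sketch uses Python's standard `ipaddress` module; the helper name is hypothetical.

```python
import ipaddress


def needs_router(vtep_a: str, vtep_b: str) -> bool:
    """Return True when two VTEP interfaces sit in different subnets,
    meaning the VXLAN traffic between them has to be routed."""
    return ipaddress.ip_interface(vtep_a).network != ipaddress.ip_interface(vtep_b).network


# Transport addresses from the picture, with an assumed /24 prefix length.
print(needs_router("192.168.0.10/24", "192.168.1.20/24"))  # True
```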

You’ve got two options to provide the ESXi hosts on the VXLAN transport network with IP addresses: an IP Pool or DHCP. With an IP Pool, you create an object inside NSX with a single gateway and a range of IP addresses, which you can only apply to the entire cluster, so you can’t differentiate between datacenter A and datacenter B.
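The limitation is easier to see with a toy model. This is not the NSX API; `IpPool` and the host names are hypothetical. Because the pool applies cluster-wide, hosts in datacenter B receive addresses from the same subnet and the same gateway as hosts in datacenter A, which only works if that subnet (and its VLAN) is stretched.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass


@dataclass
class IpPool:
    """Hypothetical model of an IP Pool: one gateway, one prefix length, one address range."""
    gateway: str
    prefix_len: int
    start: str
    end: str

    def allocate(self, hosts: list[str]) -> dict[str, tuple[str, str]]:
        """Give every host in the cluster the next free address plus the pool's single gateway."""
        first = ipaddress.ip_address(self.start)
        last = ipaddress.ip_address(self.end)
        leases: dict[str, tuple[str, str]] = {}
        for offset, host in enumerate(hosts):
            addr = first + offset
            if addr > last:
                raise RuntimeError("IP Pool exhausted")
            leases[host] = (f"{addr}/{self.prefix_len}", self.gateway)
        return leases


# A stretched cluster: hosts from both datacenters receive the same pool.
pool = IpPool(gateway="192.168.0.1", prefix_len=24, start="192.168.0.10", end="192.168.0.50")
leases = pool.allocate(["esxi-a1", "esxi-a2", "esxi-b1", "esxi-b2"])
```

Every entry in `leases` points at 192.168.0.1, including the datacenter B hosts.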

When using DHCP for the VXLAN transport network, you provide the cluster with a single VLAN ID and the ESXi hosts send out DHCP requests to get an IP address. The trick I was talking about involves using the same VLAN ID in both datacenters, but not stretching it. You then configure a separate DHCP server in each datacenter and have each one lease out a different subnet for the transport VLAN.

That way you get around the fact that you can only apply one IP Pool or one VLAN ID to a stretched cluster. No need to stretch the VXLAN transport network. 🙂
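Here’s the DHCP trick as a small simulation, assuming VLAN ID 100 and the 192.168.0.0/24 and 192.168.1.0/24 transport subnets (all hypothetical values, as are the class and function names). Every host is configured with the same VLAN ID, but because that VLAN is local to each site, a host’s DHCP request only reaches its own datacenter’s server and comes back with a site-specific subnet and gateway.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass, field

# Hypothetical VLAN ID: the same ID is configured in both datacenters, but the VLAN is not stretched.
TRANSPORT_VLAN_ID = 100


@dataclass
class DhcpScope:
    """Hypothetical DHCP scope served by the local DHCP server in one datacenter."""
    subnet: str
    gateway: str
    first_offset: int = 10  # first leased host address inside the subnet
    _issued: int = field(default=0, init=False)

    def lease(self) -> tuple[str, str]:
        """Hand out the next address in this scope together with the local gateway."""
        net = ipaddress.ip_network(self.subnet)
        addr = net.network_address + self.first_offset + self._issued
        if addr not in net or addr == net.broadcast_address:
            raise RuntimeError(f"scope {self.subnet} exhausted")
        self._issued += 1
        return f"{addr}/{net.prefixlen}", self.gateway


# One DHCP server per datacenter, each leasing a different subnet on the same VLAN ID.
SCOPES = {
    "datacenter-a": DhcpScope("192.168.0.0/24", "192.168.0.1"),
    "datacenter-b": DhcpScope("192.168.1.0/24", "192.168.1.1"),
}


def dhcp_request(site: str, vlan_id: int) -> tuple[str, str]:
    """A host's DHCP broadcast only reaches the server in its own datacenter,
    because the transport VLAN is local to each site."""
    if vlan_id != TRANSPORT_VLAN_ID:
        raise ValueError(f"host is not on transport VLAN {TRANSPORT_VLAN_ID}")
    return SCOPES[site].lease()
```

Both hosts ask on the same VLAN ID, yet a datacenter A host ends up in 192.168.0.0/24 behind 192.168.0.1 and a datacenter B host in 192.168.1.0/24 behind 192.168.1.1.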

vSphere HA Isolation Option

Now that you’ve split up the VXLAN transport network, there’s another thing you should be aware of. Inside a vSphere HA cluster, there’s an advanced option called das.isolationaddress[…] that lets an ESXi host determine whether it’s online or cut off from the network. It’s basically a ping to a gateway: if it (constantly) fails, the ESXi host considers itself isolated and triggers the cluster’s host isolation response.
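A simplified model of that decision, assuming vSphere HA’s documented behavior that a host only declares itself isolated when it stops receiving HA heartbeats and none of its isolation addresses respond. The `can_ping` callback is a hypothetical stand-in for the ICMP check, and the real HA agent adds timers and retries that are left out here.

```python
from typing import Callable, Iterable


def is_isolated(receiving_heartbeats: bool,
                isolation_addresses: Iterable[str],
                can_ping: Callable[[str], bool]) -> bool:
    """Simplified isolation check: isolated only when heartbeats are lost
    AND not a single isolation address answers."""
    if receiving_heartbeats:
        return False
    return not any(can_ping(addr) for addr in isolation_addresses)


# Heartbeats lost, but one of the two gateways still answers: the host is not isolated.
reachable = {"192.168.1.1"}
print(is_isolated(False, ["192.168.0.1", "192.168.1.1"], lambda addr: addr in reachable))  # False
```

The "any address answers" rule is why it makes sense to list more than one isolation address.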

When you virtualize the network, all your production traffic can (and likely will) go over the VXLAN transport network. It’d be good to have the ESXi hosts track connectivity on that network and act appropriately when it fails.

If you’re using the DHCP setup I described previously, the ESXi hosts have two different default gateways: one per datacenter. To properly monitor those default gateways in the vSphere HA cluster, you need to add two das.isolationaddress[…] entries, one for each default gateway IP address:

- das.isolationaddress0 - 192.168.0.1

- das.isolationaddress1 - 192.168.1.1
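If you manage several stretched clusters, generating those entries beats typing them. The helper below is hypothetical and only produces the key/value pairs you would enter under the cluster’s vSphere HA advanced options; applying them to the cluster is not shown. The limit of ten entries (indexes 0 to 9) follows the vSphere HA documentation.

```python
from __future__ import annotations

# vSphere HA accepts das.isolationaddress0 through das.isolationaddress9.
MAX_ISOLATION_ADDRESSES = 10


def isolation_address_options(gateways: list[str]) -> dict[str, str]:
    """Map each transport-network gateway to a numbered das.isolationaddressN key."""
    if len(gateways) > MAX_ISOLATION_ADDRESSES:
        raise ValueError("too many isolation addresses")
    return {f"das.isolationaddress{i}": gw for i, gw in enumerate(gateways)}


# One default gateway per datacenter, matching the two entries listed above.
for key, value in isolation_address_options(["192.168.0.1", "192.168.1.1"]).items():
    print(key, value)
```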

Other Networks

vMotion, Management, Storage (if you really want to) and Fault Tolerance traffic can all work when routed across different networks. vMotion used to require a shared layer-2 network (same subnet), but that time is long gone. Since you can apply different host profiles (or manual configurations) to ESXi hosts in the same cluster, use different VMkernel ports in different VLANs per datacenter and keep those VLANs local.
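One way to sanity-check such a design is to write the per-datacenter plan down as data and verify that no service shares a subnet across sites, so traffic between sites is routed rather than bridged. Every VLAN ID, subnet and name below is a hypothetical example, not a recommendation.

```python
from __future__ import annotations

import ipaddress

# Hypothetical plan: each VMkernel service gets its own local VLAN and subnet per datacenter.
VMK_PLAN: dict[str, dict[str, tuple[int, str]]] = {
    "datacenter-a": {"management": (110, "10.10.10.0/24"),
                     "vmotion": (120, "10.10.20.0/24"),
                     "fault-tolerance": (130, "10.10.30.0/24")},
    "datacenter-b": {"management": (210, "10.20.10.0/24"),
                     "vmotion": (220, "10.20.20.0/24"),
                     "fault-tolerance": (230, "10.20.30.0/24")},
}


def routed_between_sites(plan: dict[str, dict[str, tuple[int, str]]], service: str) -> bool:
    """True when every site uses a non-overlapping subnet for `service`,
    i.e. traffic for that service between sites has to be routed."""
    nets = [ipaddress.ip_network(site[service][1]) for site in plan.values()]
    return all(not a.overlaps(b) for i, a in enumerate(nets) for b in nets[i + 1:])


for service in ("management", "vmotion", "fault-tolerance"):
    print(service, routed_between_sites(VMK_PLAN, service))
```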

Conclusion

Now if you’re a network person, you might say: “Well, what about tech like OTV?” Those technologies just solve a few symptoms of the problem; they don’t solve the problem itself. Plus, you need to throw specific hardware at the solution and hairpin your traffic, and you can’t solve it in a distributed, future-proof way.

There are probably a few details I haven’t covered (there are a few design guides for that), but the main takeaway should be: do yourself a favor, don’t stretch your VLANs; virtualize your network instead.
